In the FastMCP 2.0 release post, I highlighted how the project’s focus has evolved from simply creating MCP servers in 1.0, to making it easier to work with the growing ecosystem in 2.0. MCP composition addresses various ways of combining your MCP servers. But what about integrating with servers you don’t control, like remote services, third-party tools, or servers using different transports?

This is where proxying comes in. It’s a core piece of the FastMCP 2.0 vision, enabling seamless interaction across the diverse landscape of MCP servers.

What is an MCP Proxy?

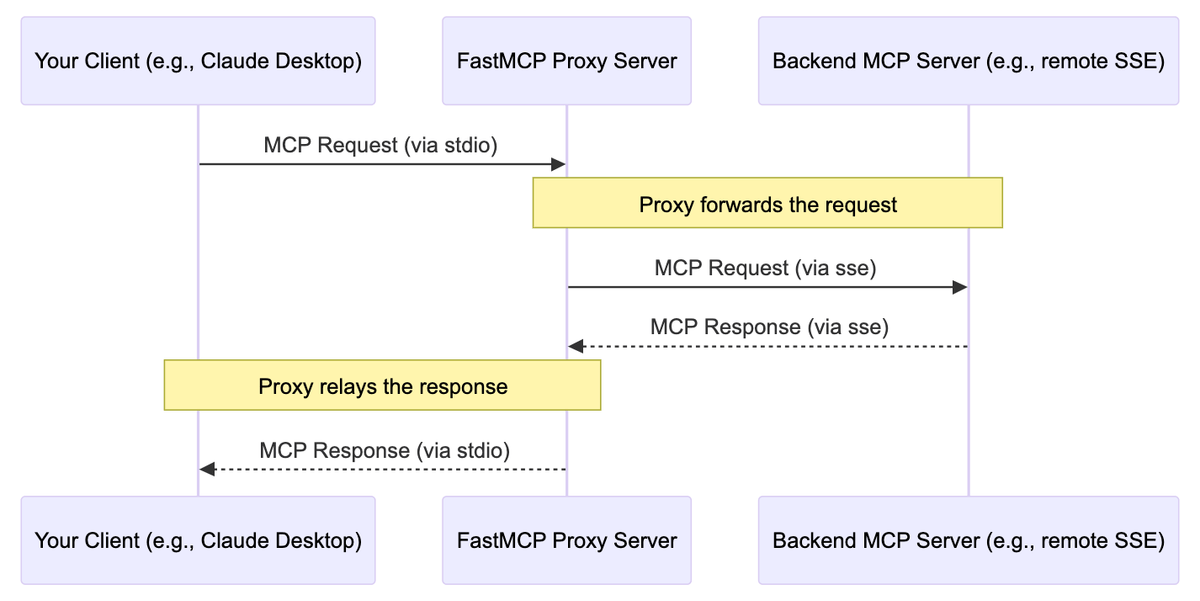

A FastMCP proxy acts as an intermediary, a kind of universal travel adapter. You run a FastMCP server instance, but instead of implementing its own logic, it forwards requests to a designated backend MCP server.

Here’s the flow:

- Your client application sends a request (e.g., `call tool XYZ`) to the local FastMCP proxy.

- The proxy receives this request and forwards it to the configured backend server (which could be anywhere, using any transport).

- The backend server processes the request and sends its response back to the proxy.

- The proxy relays this response to your original client.

To the client, it looks like a standard FastMCP interaction. Under the hood, the proxy handles the communication translation.
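The four steps above can be sketched with a toy model in plain Python. This is not how FastMCP implements proxying; `ToyBackend`, `ToyProxy`, and the `trace` list are hypothetical names used only to make the request path visible.

```python
class ToyBackend:
    """Stands in for a backend MCP server that owns the real tool logic."""

    def call_tool(self, name, arguments):
        if name == "add":
            return arguments["a"] + arguments["b"]
        raise KeyError(f"unknown tool: {name}")


class ToyProxy:
    """Holds no tool logic of its own; it only forwards and relays."""

    def __init__(self, backend):
        self.backend = backend
        self.trace = []

    def call_tool(self, name, arguments):
        # The client's request arrives at the proxy.
        self.trace.append(f"proxy received {name}")
        # The proxy forwards it; the backend does the actual work.
        result = self.backend.call_tool(name, arguments)
        self.trace.append(f"backend answered {name}")
        # The proxy relays the backend's response to the client.
        self.trace.append(f"proxy relayed {name}")
        return result


proxy = ToyProxy(ToyBackend())
result = proxy.call_tool("add", {"a": 2, "b": 3})
```

The client only ever talks to `proxy`; it never needs to know where `ToyBackend` lives or how it is reached.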

This capability might seem simple, but it solves several practical challenges in building and using MCP-based systems:

- Transport Bridging: This is fundamental. Many powerful MCP servers might only be available via network transports like `sse` or `websocket`. However, local clients like Claude Desktop often expect to communicate via `stdio`. A FastMCP proxy running locally can bridge this gap, listening on `stdio` while communicating with the backend via `sse` (or vice-versa, or any other combination). This instantly makes remote or differently-transported servers accessible to local tools.

- Interaction Simplification: Instead of managing connections to numerous backend servers with potentially different addresses and transports, applications can interact with a single, local proxy endpoint. The proxy handles the complexity of routing requests to the appropriate backend, streamlining client configuration.

- Gateway Functionality: A proxy can serve as a controlled entry point to backend services. While base proxying forwards requests directly, the underlying `FastMCPProxy` class could be subclassed (for advanced users) to inject logic like request logging, caching, authentication checks, or even basic request/response modification, creating a more robust gateway.

- Decoupling: Proxies decouple the client’s required transport from the backend server’s implementation. The backend server can change its transport or location, and only the proxy configuration needs updating, not every client application.
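To make the gateway idea concrete, here is a minimal sketch in plain Python of a wrapper that adds logging and caching around a forwarding function. It deliberately does not subclass `FastMCPProxy`, since that class's interface is not described here; `LoggingCachingGateway`, `fake_backend`, and the `add` tool are all hypothetical, and the cache assumes argument values are hashable.

```python
class LoggingCachingGateway:
    """Wraps any callable forward(name, arguments) with a log and a cache."""

    def __init__(self, forward):
        self.forward = forward
        self.log = []
        self.cache = {}

    def call_tool(self, name, arguments):
        # Build a hashable cache key from the tool name and its arguments.
        key = (name, tuple(sorted(arguments.items())))
        if key in self.cache:
            self.log.append(("cache_hit", name))
            return self.cache[key]
        # Cache miss: forward to the backend and remember the answer.
        result = self.forward(name, arguments)
        self.log.append(("forwarded", name))
        self.cache[key] = result
        return result


backend_calls = []


def fake_backend(name, arguments):
    """Hypothetical backend: records the call and sums the argument values."""
    backend_calls.append(name)
    return sum(arguments.values())


gateway = LoggingCachingGateway(fake_backend)
first = gateway.call_tool("add", {"a": 1, "b": 2})
second = gateway.call_tool("add", {"a": 1, "b": 2})
```

The second call is answered from the cache, so the backend is contacted only once. Authentication checks or request rewriting would slot into `call_tool` in the same way, before the forward.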

Creating a Proxy

FastMCP makes creating a proxy straightforward using the FastMCP.from_client() class method. It leverages the standard fastmcp.Client to define the connection to the backend.

    from fastmcp import FastMCP, Client

    # 1. Configure a client for the backend server.
    # This target could be anything the Client can connect to:
    # - Remote SSE/WebSocket URL: Client("http://api.example.com/mcp/sse")
    # - Local Python script: Client("path/to/backend_server.py")
    # - Another FastMCP instance: Client(another_mcp_instance)
    backend_client = Client("http://api.example.com/mcp/sse")

    # 2. Create the proxy server instance from the client.
    proxy_server = FastMCP.from_client(
        backend_client,
        name="MySmartProxy",
    )

    # 3. Run the proxy server (defaults to stdio)
    if __name__ == "__main__":
        proxy_server.run()

        # You could run it on SSE instead if needed:
        # proxy_server.run(transport="sse", port=9001)

When you call FastMCP.from_client(), it doesn’t discover the backend components immediately. Instead, it stores the provided backend_client within the proxy_server instance. When the proxy server later receives a request (like list_tools or call_tool), it dynamically uses the stored backend_client at that moment to forward the request to the backend and relay the response. This ensures the proxy always reflects the current state of the backend server. The result is a standard FastMCP instance, ready to run.
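The practical effect of this forward-on-demand design can be shown with a small toy model. The classes below are hypothetical stand-ins written in plain Python, not FastMCP code: `LazyProxy` stores a client at construction time and only consults it when a request arrives.

```python
class ToyBackendClient:
    """Hypothetical stand-in for a client connected to a backend server."""

    def __init__(self):
        self.tools = {"echo": lambda text: text}

    def list_tools(self):
        return sorted(self.tools)

    def call_tool(self, name, **arguments):
        return self.tools[name](**arguments)


class LazyProxy:
    """Stores the client up front; discovers nothing until asked."""

    def __init__(self, client):
        self.client = client  # stored only, no backend call happens here

    def list_tools(self):
        # Ask the backend at request time, so the answer is always current.
        return self.client.list_tools()

    def call_tool(self, name, **arguments):
        return self.client.call_tool(name, **arguments)


backend = ToyBackendClient()
proxy = LazyProxy(backend)

before = proxy.list_tools()
# The backend gains a tool after the proxy was created...
backend.tools["shout"] = lambda text: text.upper()
# ...and the proxy sees it without being rebuilt.
after = proxy.list_tools()
shouted = proxy.call_tool("shout", text="hi")
```

Had the proxy copied the tool list at construction time, `after` would still list only `echo`; forwarding at request time is what keeps it in sync.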

Proxies Love Composition

Crucially, because FastMCP.from_client() yields a standard FastMCP instance, these proxies integrate perfectly with FastMCP 2.0’s composition model. You can call mount() or import_server() to compose a proxy server alongside other servers, just like any other FastMCP server.

    from fastmcp import FastMCP, Client

    main_app = FastMCP(name="CombinedApp")

    @main_app.tool()
    def local_utility():
        return "This tool runs directly in the main app."

    # Assume proxy_server is created as shown before
    proxy_server = FastMCP.from_client(...)

    # Mount the proxy server instance under a prefix
    main_app.mount("proxied_service", proxy_server)

    # The main_app now exposes:
    # - "local_utility" (its own tool)
    # - "proxied_service_<backend_tool_name>" (tools from the backend via the proxy)
    # - "proxied_service+<backend_resource_uri>" (resources from the backend via the proxy)

    if __name__ == "__main__":
        main_app.run()

This allows you to build sophisticated applications where a single FastMCP server acts as a unified interface to both local functionality and multiple remote or diverse backend MCP services.
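As a rough illustration of the naming described in the comments above, the following plain-Python sketch computes what a client would see after mounting. The helper functions and the backend tool names (`search`, `summarize`) are hypothetical, and the separators are taken from the comments in this post; check the docs for the exact behavior in your FastMCP version.

```python
def mounted_tool_name(prefix, tool_name):
    """Tool names from a mounted server are joined with an underscore."""
    return f"{prefix}_{tool_name}"


def mounted_resource_uri(prefix, resource_uri):
    """Resource URIs from a mounted server are joined with a plus sign."""
    return f"{prefix}+{resource_uri}"


def exposed_tools(local_tools, mounts):
    """List local tools first, then each mounted server's prefixed tools."""
    names = list(local_tools)
    for prefix, backend_tools in mounts.items():
        names.extend(mounted_tool_name(prefix, tool) for tool in backend_tools)
    return names


names = exposed_tools(
    ["local_utility"],
    {"proxied_service": ["search", "summarize"]},
)
uri = mounted_resource_uri("proxied_service", "data://config")
```

The prefix keeps backend names from colliding with local ones, which is what lets several proxies sit side by side in one app.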

Wrapping Up

Proxying is essential plumbing for a truly interoperable MCP ecosystem. FastMCP 2.0 makes it trivial to bridge transports, simplify client interactions, and integrate disparate MCP servers. By treating proxies as first-class FastMCP servers, we unlock flexible and powerful architectural patterns.

If you haven’t already, explore the proxying capability – it might just be the missing piece for connecting your AI workflows. Check out the proxying docs for further details.

Happy Connecting! 🔌